New Dataset for Multimodal Music Emotion Recognition

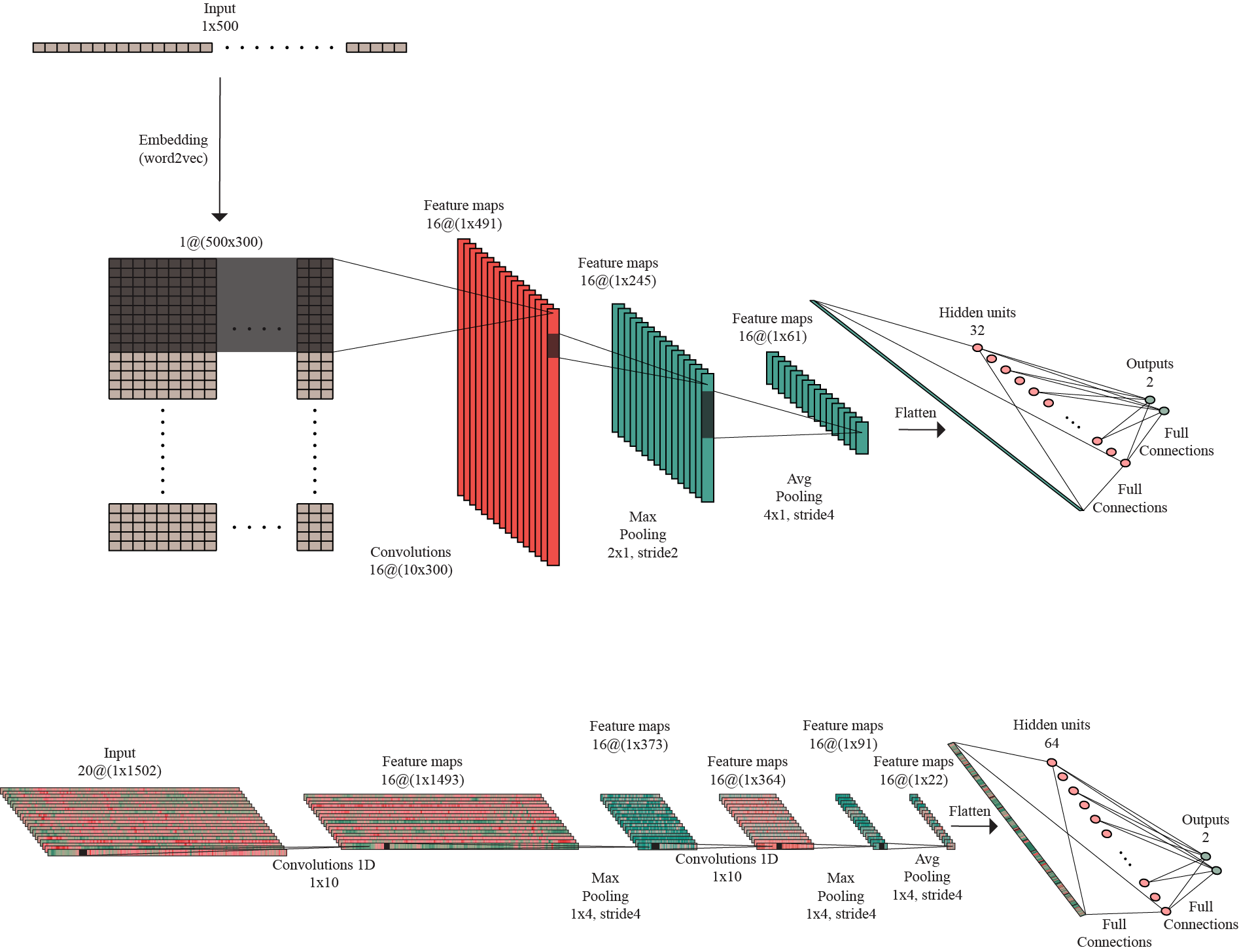

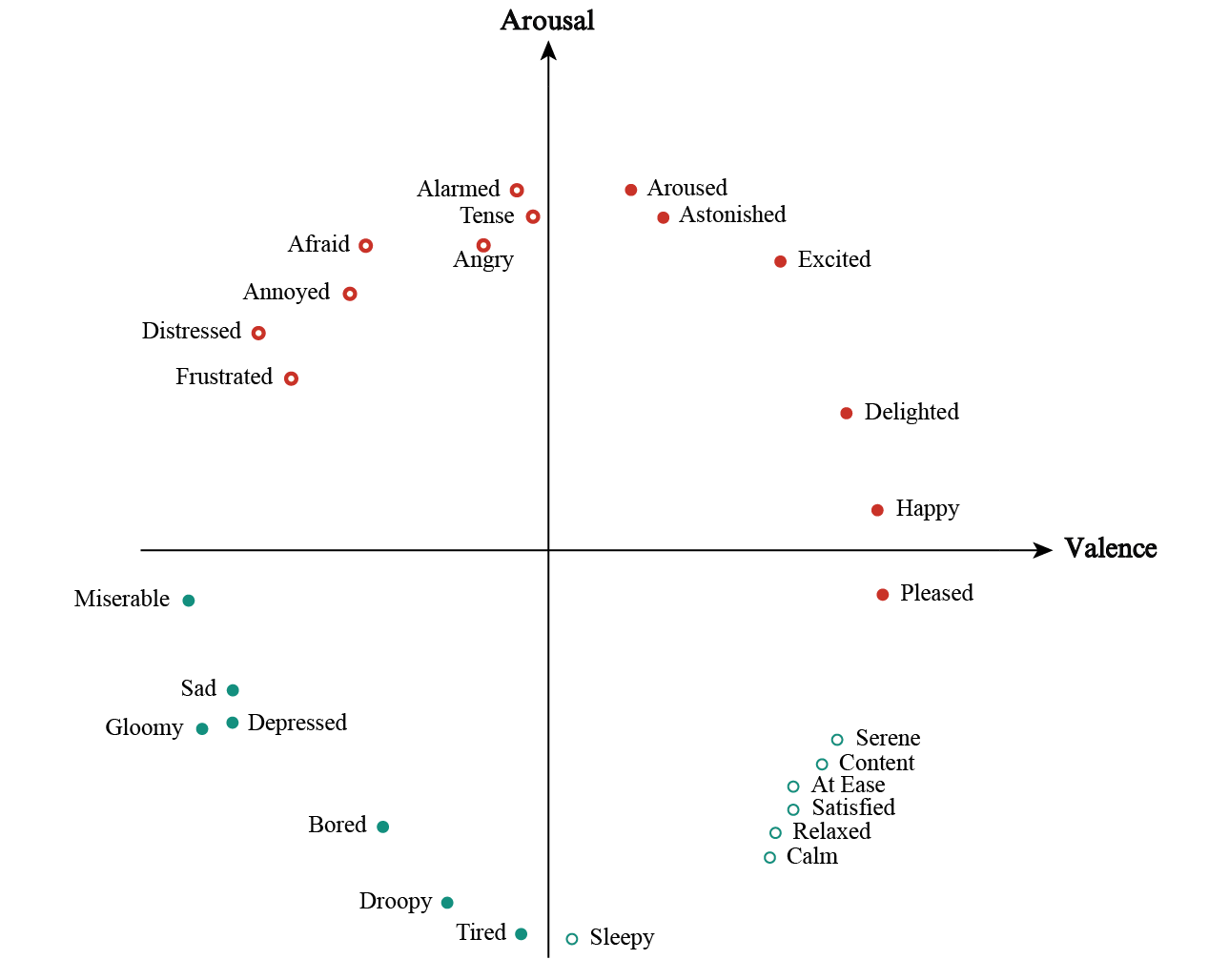

Studying the timeline that people describe when listening to music revealed the need for a new dataset: one that supports recognizing emotions not only from audio features, but also from features of lyrics and listener comments, with annotations extracted from social tags.